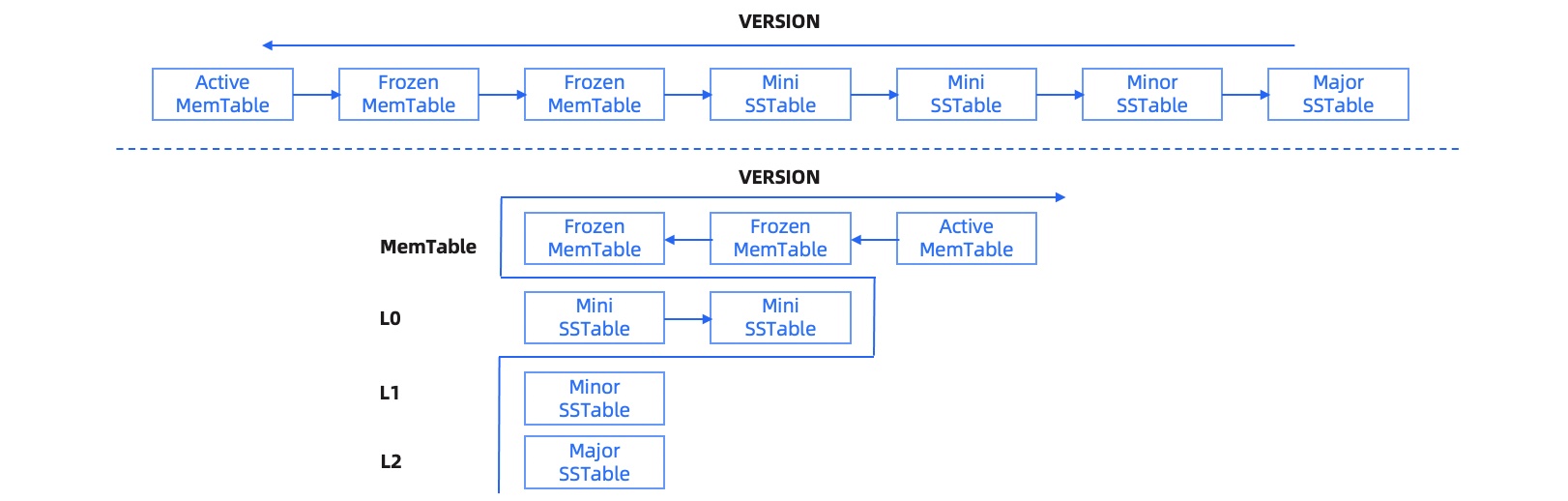

The storage engine of OceanBase Database uses the log-structured merge-tree (LSM-tree) architecture. In this architecture, data is stored in MemTables (in memory) and SSTables (on disk). When the memory occupied by a MemTable exceeds the specified threshold, the data in the MemTable is flushed to disk as an SSTable to release memory. This process is called a minor compaction.

Hierarchical minor compactions

In OceanBase Database V2.1 and earlier, minor compactions maintain only one SSTable: each time a MemTable requires a minor compaction, its data is merged with the existing SSTable. As more minor compactions are performed, this SSTable grows larger and larger, so the amount of data that each minor compaction has to process also grows. Minor compactions therefore become slower, and MemTable memory can be exhausted before it is released. To address this, OceanBase Database V2.2 introduced the hierarchical minor compaction strategy.
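The growth described above can be estimated with a back-of-the-envelope sketch. It assumes, purely for illustration, that every frozen MemTable holds the same number of new, non-overlapping rows and that each minor compaction rewrites the whole existing SSTable. The function names are hypothetical and do not come from OceanBase Database, and the flush-only function is a comparison baseline, not OceanBase's strategy.

```python
def rewritten_rows_single_sstable(memtable_rows: int, compactions: int) -> int:
    """Total rows written when every minor compaction merges the frozen
    MemTable into one ever-growing SSTable (the pre-V2.2 behavior).
    Assumes every MemTable holds `memtable_rows` new, distinct rows."""
    sstable_rows = 0
    total_written = 0
    for _ in range(compactions):
        # The existing SSTable is rewritten together with the new MemTable data.
        sstable_rows += memtable_rows
        total_written += sstable_rows
    return total_written


def written_rows_flush_only(memtable_rows: int, compactions: int) -> int:
    """Baseline: rows written if each MemTable were flushed to its own SSTable."""
    return memtable_rows * compactions


if __name__ == "__main__":
    for k in (1, 10, 100):
        print(k, rewritten_rows_single_sstable(1_000, k), written_rows_flush_only(1_000, k))
```

Under these assumptions, the rewritten data after k compactions equals k(k+1)/2 MemTables' worth, so it grows quadratically, while the flush-only baseline grows linearly. That gap is what a layered structure is meant to narrow.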

Based on specific implementations in the industry and the architecture of OceanBase Database, hierarchical minor compaction can be regarded as a tiered-leveled compaction solution: size-tiered compactions are performed at the L0 layer, which is split into multiple sublayers based on scenarios, and leveled compactions are performed at the L1 and L2 layers at macroblock granularity.

L0 layer

The L0 layer is where mini SSTables are placed. Depending on the parameter settings of different minor compaction strategies, the L0 layer may contain no SSTables at all. For the L0 layer, server-level parameters specify the number of sublayers and the maximum number of SSTables allowed per sublayer. The L0 layer is divided into sublayers level-0 to level-n, and every sublayer has the same SSTable limit. When the number of SSTables at a level-i sublayer reaches the limit, these SSTables are compacted into one SSTable, which is written to the level-(i+1) sublayer. When the number of SSTables at the last sublayer of the L0 layer reaches the limit, a compaction from L0 to L1 is performed to release space. If the L0 layer exists, frozen MemTables are compacted into a new mini SSTable at the level-0 sublayer. The SSTables at each sublayer of L0 are sorted by base_version, and the SSTables involved in any subsequent intra-layer or inter-layer compaction must have adjacent versions. This keeps the SSTables ordered by version, which simplifies the logic of subsequent reads and compactions.
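The sublayer cascade can be modeled in a few lines of Python. This is a toy sketch, not OceanBase's implementation: SSTables are reduced to their version ranges, the class and method names are hypothetical, the size-ratio check that governs L1 compactions is ignored, and we assume that a full last sublayer is merged into a single L1 SSTable.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class SSTable:
    # Version range [base_version, end_version) covered by this SSTable.
    base_version: int
    end_version: int


def merge(tables: List[SSTable]) -> SSTable:
    """Merge SSTables with adjacent version ranges into one SSTable."""
    tables = sorted(tables, key=lambda t: t.base_version)
    for older, newer in zip(tables, tables[1:]):
        # Only version-adjacent SSTables may be compacted together.
        assert older.end_version == newer.base_version, "versions must be adjacent"
    return SSTable(tables[0].base_version, tables[-1].end_version)


class L0Model:
    """Toy model of sublayers level-0 .. level-(num_levels-1) plus one L1 SSTable."""

    def __init__(self, num_levels: int, max_per_level: int) -> None:
        self.levels: List[List[SSTable]] = [[] for _ in range(num_levels)]
        self.max_per_level = max_per_level
        self.l1: Optional[SSTable] = None

    def add_mini(self, sstable: SSTable) -> None:
        """Place a freshly flushed mini SSTable at level-0 and cascade if needed."""
        self.levels[0].append(sstable)
        for i, level in enumerate(self.levels):
            if len(level) < self.max_per_level:
                break  # This sublayer still has room; nothing further cascades.
            merged = merge(level)
            level.clear()
            if i + 1 < len(self.levels):
                # level-i is full: its SSTables become one SSTable at level-(i+1).
                self.levels[i + 1].append(merged)
            else:
                # The last sublayer is full: compact it into L1 (assumption).
                self.l1 = merged if self.l1 is None else merge([self.l1, merged])


if __name__ == "__main__":
    model = L0Model(num_levels=2, max_per_level=2)
    for v in range(5):
        model.add_mini(SSTable(v, v + 1))
    print(model.levels, model.l1)
```

With two sublayers and two SSTables per sublayer, every four mini SSTables end up merged into L1, and the assertion in `merge` confirms that only version-adjacent SSTables are ever compacted together.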

The sublayers within L0 delay compactions into L1 and reduce write amplification, but they cause read amplification. For example, if L0 has n sublayers with up to m SSTables each, a read may have to access as many as (n × m + 2) SSTables: up to n × m mini SSTables at L0 plus the SSTables at L1 and L2. Therefore, the number of sublayers and the maximum number of SSTables allowed per sublayer must be kept within a reasonable range.
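As a worked instance of the bound, take n = 3 sublayers and m = 4 SSTables per sublayer (arbitrary example values, not defaults):

```latex
N_{\text{read}} \le n \times m + 2 = 3 \times 4 + 2 = 14
```

Adding one more sublayer (n = 4) would raise the bound to 4 × 4 + 2 = 18, which is why both values are kept small.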

L1 layer

The L1 layer is where minor SSTables are placed. The minor SSTables at the L1 layer are sorted by rowkey. When the number of mini SSTables at the L0 layer reaches the threshold, the minor SSTables at L1 can participate in the compaction together with the L0 SSTables. However, an L1-layer compaction is scheduled only when the ratio of the total size of the mini SSTables at L0 to the total size of the minor SSTables at L1 reaches a specified threshold; otherwise, compactions are performed only within the L0 layer. This improves compaction efficiency and reduces overall write amplification.
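The scheduling rule above can be expressed as a small decision function. This is a hedged sketch: `mini_count_threshold` and `size_ratio_threshold` are hypothetical names standing in for the thresholds the text mentions (they are not actual OceanBase parameter names), and treating an empty L1 as an infinite ratio is our own assumption.

```python
from typing import List


def choose_compaction(
    mini_sizes: List[int],
    minor_size: int,
    mini_count_threshold: int,
    size_ratio_threshold: float,
) -> str:
    """Return "none", "within L0", or "L0->L1" for the current layer state.

    mini_sizes: sizes of the mini SSTables at L0.
    minor_size: total size of the minor SSTables at L1.
    """
    if len(mini_sizes) < mini_count_threshold:
        # Not enough mini SSTables at L0 yet: nothing to schedule.
        return "none"
    # Treat an empty L1 as an infinite ratio (assumption), so L1 gets created.
    ratio = float("inf") if minor_size == 0 else sum(mini_sizes) / minor_size
    if ratio >= size_ratio_threshold:
        # L0 is large relative to L1: merge the L0 data into L1.
        return "L0->L1"
    # Otherwise compact only within L0, leaving the L1 SSTables untouched.
    return "within L0"
```

Keeping most compactions inside L0 until L0 has grown large relative to L1 means the usually larger L1 data is rewritten less often, which is the write-amplification saving the text describes.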

L2 layer

The L2 layer is where the baseline major SSTable is placed. The major SSTable is read-only and does not participate in actual compaction operations during routine minor compactions. This ensures that the baseline data is consistent among the replicas.

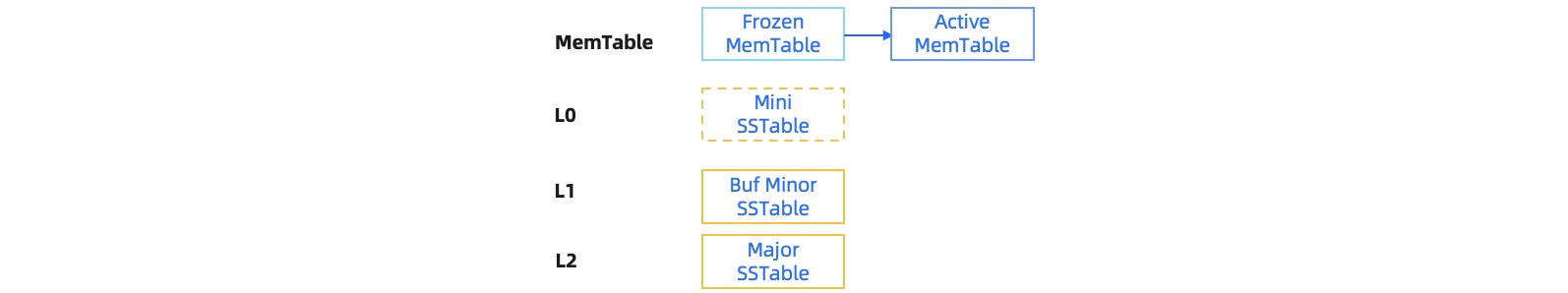

Minor compactions in queuing table mode

Under the LSM-tree architecture, data rows are not deleted in place. Instead, a delete operation writes a Delete mark (tombstone). If a user's business table involves frequent insert and delete operations, the table may hold little live data at any moment, but because of how the LSM-tree architecture works, its incremental data contains a large number of Delete marks. Even when a query needs to return only a small amount of data, the scan cannot quickly determine whether specific rows have been deleted and still has to read through a large number of Delete marks. As a result, the response time of a small query may greatly exceed the expected value. To improve query performance on this type of table, OceanBase Database provides a customizable table mode called queuing table (known as a buffer table from the business perspective) that adopts a special minor compaction strategy. You can run the following command to specify the queuing table mode:

obclient> ALTER TABLE user_table table_mode = 'queuing';
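The scan cost described above can be illustrated with a toy model of a queue-like workload in which rows are inserted and later deleted. It is not OceanBase's read path: it keeps only one entry per change, represents a Delete mark as `None`, and simply counts how many entries a scan has to examine. All names are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

# One incremental entry: (rowkey, value); a value of None stands for a Delete mark.
Entry = Tuple[int, Optional[str]]


def scan(base: Dict[int, str], increments: List[Entry]) -> Tuple[List[Tuple[int, str]], int]:
    """Merge base rows with incremental entries (applied oldest first) and
    return (live rows sorted by rowkey, number of entries examined)."""
    merged: Dict[int, Optional[str]] = dict(base)
    examined = len(base)
    for rowkey, value in increments:
        examined += 1  # Every entry, including each Delete mark, must be read.
        merged[rowkey] = value
    live = sorted((k, v) for k, v in merged.items() if v is not None)
    return live, examined


if __name__ == "__main__":
    # Enqueue 1,000 messages, dequeue (delete) all of them, then enqueue 5 more.
    ops: List[Entry] = [(k, f"msg{k}") for k in range(1000)]
    ops += [(k, None) for k in range(1000)]
    ops += [(k, f"msg{k}") for k in range(1000, 1005)]
    live, examined = scan({}, ops)
    print(len(live), examined)  # prints: 5 2005
```

Only 5 rows are live, yet the scan examines 2,005 entries, most of them inserts that were later deleted and the Delete marks that cancel them.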

For the queuing table mode, OceanBase Database introduces an adaptive buffer table compaction strategy. Under this strategy, the storage layer uses minor compaction statistics to decide whether to apply the buffer table compaction strategy to a table. When the storage layer identifies a table that behaves like a buffer table, it attempts to schedule a buffer minor compaction, which generates a buffer minor SSTable from the major SSTable, the latest incremental data, and the point in time of the current read snapshot. This compaction removes all Delete marks from the incremental data, so subsequent queries read the newly generated buffer minor SSTable and avoid a large number of invalid scan operations.
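A buffer minor compaction can be sketched as folding the incremental changes visible at the read snapshot into the major rows and dropping every row whose latest state is a Delete mark. This is an illustrative model under our own assumptions (rows as a dict, a Delete mark as `None`, integer versions, changes newer than the snapshot kept aside unchanged); the function name is hypothetical and this is not the storage layer's actual algorithm.

```python
from typing import Dict, List, Optional, Tuple

# Incremental entry: (version, rowkey, value); a value of None is a Delete mark.
Entry = Tuple[int, int, Optional[str]]


def buffer_minor_compaction(
    major: Dict[int, str], increments: List[Entry], snapshot: int
) -> Tuple[Dict[int, str], List[Entry]]:
    """Fold all increments with version <= snapshot into the major rows and
    drop the Delete marks. Returns (buffer minor SSTable rows, increments
    newer than the snapshot, which must be kept as they are)."""
    rows: Dict[int, Optional[str]] = dict(major)  # The major rows are not modified.
    newer: List[Entry] = []
    # Stable sort by version only, so None values are never compared.
    for version, rowkey, value in sorted(increments, key=lambda e: e[0]):
        if version <= snapshot:
            rows[rowkey] = value  # The latest change at or before the snapshot wins.
        else:
            newer.append((version, rowkey, value))
    # Rows whose latest state is a Delete mark disappear entirely.
    compacted = {k: v for k, v in rows.items() if v is not None}
    return compacted, newer
```

A query at the snapshot can then read the compacted rows directly, without stepping over any of the folded Delete marks.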

Minor compaction triggering

A minor compaction can be automatically or manually triggered.

When a tenant's MemTable memory usage reaches memstore_limit_percentage × freeze_trigger_percentage of the tenant's memory, a freeze (the preparation for a minor compaction) is automatically triggered, and the system then schedules a minor compaction.
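The trigger point can be computed directly from the two parameters. The sketch below assumes both are expressed as percentages and multiplies them against the tenant's memory as the text describes; the function name and the example values (a 10 GiB tenant, 50 and 20) are illustrative only, not recommended settings or guaranteed defaults.

```python
def freeze_threshold_bytes(
    tenant_memory_bytes: int,
    memstore_limit_percentage: float,
    freeze_trigger_percentage: float,
) -> float:
    """MemTable usage (in bytes) at which a freeze is triggered,
    treating both parameters as percentages."""
    # Memory the MemStore may occupy, as a share of the tenant's memory.
    memstore_limit = tenant_memory_bytes * memstore_limit_percentage / 100
    # A freeze starts once usage reaches this share of the MemStore limit.
    return memstore_limit * freeze_trigger_percentage / 100


if __name__ == "__main__":
    gib = 2**30
    print(freeze_threshold_bytes(10 * gib, 50, 20) / gib)  # prints: 1.0
```

With these example values, the MemStore limit is 5 GiB and a freeze is triggered once MemTable usage reaches 1 GiB.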

Note

memstore_limit_percentage specifies the ratio of the memory that can be occupied by the MemStore to the total available memory of a tenant. For more information about this parameter, see the topic "System parameters" in OceanBase Database Reference Guide.

You can also run the following command to manually trigger a minor compaction. The syntax is as follows:

ALTER SYSTEM MINOR FREEZE [zone] [server_list] [tenant_list] [replica]

tenant_list:

TENANT [=] (tenant_name_list)

tenant_name_list:

tenant_name [, tenant_name ...]

replica:

PARTITION_ID [=] 'partition_idx%partition_count@table_id'

server_list:

SERVER [=] ip_port_list

Examples:

- Cluster-level minor compaction

obclient> ALTER SYSTEM MINOR FREEZE;

- Server-level minor compaction

obclient> ALTER SYSTEM MINOR FREEZE SERVER='10.10.10.1:2882';

- Tenant-level minor compaction

obclient> ALTER SYSTEM MINOR FREEZE TENANT='prod_tenant';

- Replica-level minor compaction

obclient> ALTER SYSTEM MINOR FREEZE PARTITION_ID = '8%1@1099511627933';
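When scripting replica-level freezes, the PARTITION_ID string can be assembled from its three parts. The helper names below are hypothetical; the code only formats and parses strings of the form partition_idx%partition_count@table_id given in the syntax above and does not connect to OceanBase Database.

```python
import re
from typing import Tuple

# Matches 'partition_idx%partition_count@table_id' from the syntax above.
_PARTITION_ID = re.compile(r"^(\d+)%(\d+)@(\d+)$")


def format_partition_id(partition_idx: int, partition_count: int, table_id: int) -> str:
    """Build the PARTITION_ID string for a replica-level minor freeze."""
    return f"{partition_idx}%{partition_count}@{table_id}"


def parse_partition_id(text: str) -> Tuple[int, int, int]:
    """Split a PARTITION_ID string into (partition_idx, partition_count, table_id)."""
    match = _PARTITION_ID.match(text)
    if match is None:
        raise ValueError(f"not a PARTITION_ID string: {text!r}")
    idx, count, table_id = (int(g) for g in match.groups())
    return idx, count, table_id


def minor_freeze_partition_sql(partition_idx: int, partition_count: int, table_id: int) -> str:
    """Return a replica-level statement in the form given by the syntax above."""
    pid = format_partition_id(partition_idx, partition_count, table_id)
    return f"ALTER SYSTEM MINOR FREEZE PARTITION_ID = '{pid}';"
```

Parsing before formatting lets a script reject malformed identifiers before any statement is sent to the cluster.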

Note that although you can manually trigger a minor freeze for a single partition, this may not effectively release memory if the partition shares a memory block with other partitions. To effectively release a tenant's MemTable memory, perform a tenant-level minor freeze.