Basic concepts

Cluster

An OceanBase Database cluster spans one or more regions. A region consists of one or more zones, and one or more OBServers are deployed in each zone. Each OBServer stores replicas of several partitions.

Region

A region refers to a city or a geographic area. If an OceanBase Database cluster spans two or more regions, the geo-disaster recovery capability is implemented for the data and services of the OceanBase Database cluster. If an OceanBase Database cluster spans only one region, the data and services of the OceanBase Database cluster are affected when a city-wide failure occurs.

Zone

A zone generally refers to an IDC with an independent network and power supply. You can deploy one IDC with three replicas if IDC-level disaster recovery is not required. An OceanBase Database cluster that spans two or more zones in the same region provides the disaster recovery capability to ensure high availability when servers in one zone fail.

OBServer

An OBServer is a server that runs the observer process. You can deploy one or more OBServers on a physical server, but generally only one OBServer is deployed on each physical server. In OceanBase Database, each OBServer is uniquely identified by its IP address and service port.

Partition

OceanBase Database organizes user data by partition. Data copies of a partition on different servers are called replicas. The Paxos consensus protocol is used to ensure strong consistency among different replicas of the same partition. Each partition and its replicas comprise an independent Paxos group. One replica is the leader and the other replicas are followers. The leader supports strong-consistency reads and writes, and the followers support weak-consistency reads.
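Because each Paxos group needs a majority of its replicas to agree, the number of replicas determines how many failures a partition can tolerate. The following is a minimal sketch of that arithmetic only. The function names are hypothetical and are not part of OceanBase Database.

```python
def majority(replica_count):
    """Smallest number of replicas that forms a majority of the group."""
    if replica_count < 1:
        raise ValueError("a Paxos group needs at least one replica")
    return replica_count // 2 + 1


def tolerated_failures(replica_count):
    """Number of replicas that can be lost while a majority remains."""
    return replica_count - majority(replica_count)


for n in (3, 5):
    print(f"{n} replicas: majority={majority(n)}, "
          f"tolerated failures={tolerated_failures(n)}")
```

With three replicas one failure is tolerated, and with five replicas two failures are tolerated.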

Deployment mode

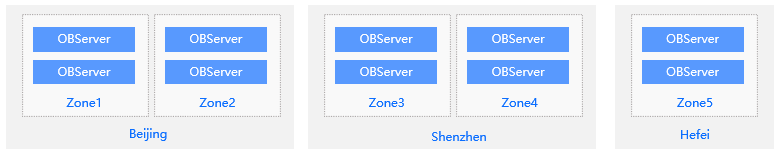

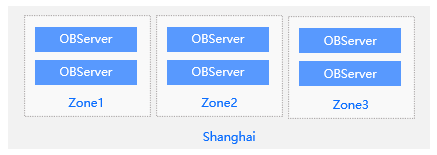

To make the majority of replicas of a partition available when a server fails, OceanBase Database ensures that multiple replicas of a partition are scheduled on different servers. Replicas of a partition are distributed across zones or regions. This ensures both data reliability and database service availability in case of city-wide disasters or IDC failures, achieving a balance between reliability and availability. OceanBase Database provides innovative disaster recovery capabilities in several deployment modes. For example, you can deploy your cluster in five IDCs across three regions to implement lossless disaster recovery for city-wide disasters or in three IDCs in the same region to implement lossless disaster recovery for IDC failures.

Five IDCs across three regions

Three IDCs in the same region
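The two deployment modes named above can be checked with a small model. This is a sketch under stated assumptions: one Paxos replica per IDC, the five IDCs split 2-2-1 across the three regions, and region and IDC names that are purely hypothetical. It only counts replicas and does not reflect how OceanBase Database schedules them.

```python
# Hypothetical layouts: region -> IDC -> Paxos replicas of one partition.
FIVE_IDCS_THREE_REGIONS = {
    "region_a": {"idc1": 1, "idc2": 1},
    "region_b": {"idc3": 1, "idc4": 1},
    "region_c": {"idc5": 1},
}
THREE_IDCS_ONE_REGION = {
    "region_a": {"idc1": 1, "idc2": 1, "idc3": 1},
}


def total_replicas(layout):
    return sum(count for idcs in layout.values() for count in idcs.values())


def has_majority_without(layout, region=None, idc=None):
    """True if a majority of replicas remains after losing a region or an IDC."""
    total = total_replicas(layout)
    remaining = sum(
        count
        for region_name, idcs in layout.items()
        for idc_name, count in idcs.items()
        if region_name != region and idc_name != idc
    )
    return remaining >= total // 2 + 1


# Losing any single region still leaves a majority in the five-IDC layout.
print(all(has_majority_without(FIVE_IDCS_THREE_REGIONS, region=r)
          for r in FIVE_IDCS_THREE_REGIONS))
# Losing one IDC leaves a majority in the three-IDC layout ...
print(has_majority_without(THREE_IDCS_ONE_REGION, idc="idc1"))
# ... but losing the only region does not.
print(has_majority_without(THREE_IDCS_ONE_REGION, region="region_a"))
```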

The lossless disaster recovery capability of OceanBase Database also facilitates O&M operations on a cluster. When you replace or repair an IDC or server, you can delete the corresponding IDC or server, replace or repair it, and then add a new one. OceanBase Database automatically replicates and balances partition replicas to avoid impacting the database service.

RootService

An OceanBase Database cluster provides RootService, which runs on an OBServer. When the OBServer on which RootService runs fails, another OBServer is elected for running RootService. RootService provides resource management, load balancing, and schema management.

Resource management

RootService manages metadata of regions, zones, OBServers, resource pools, and resource units. For example, RootService starts or stops OBServers and changes the resource specifications of a tenant.

Load balancing

RootService distributes resource units and partitions across servers, balances leaders among servers, and performs automatic replication or migration to supplement missing replicas during disaster recovery.

Schema management

RootService responds to Data Definition Language (DDL) requests and generates new schemas.

Locality

The distribution and types of replicas of partitions under a tenant in different zones are called locality. When you create a tenant, you can specify its locality to determine the initial types and distribution of replicas of partitions under the tenant. After a tenant is created, you can change the locality of the tenant to change the types and distribution of replicas.

The following statement creates a tenant named mysql_tenant. Replicas of partitions under the tenant in the zones z1, z2, and z3 are full-featured replicas.

obclient> CREATE TENANT mysql_tenant RESOURCE_POOL_LIST = ('resource_pool_1'), primary_zone = "z1;z2;z3", locality = "F@z1, F@z2, F@z3" set ob_tcp_invited_nodes='%', ob_timestamp_service='GTS';

The following statement changes the locality of mysql_tenant so that replicas of partitions under the tenant in z1 and z2 are full-featured replicas and those in z3 are log replicas. OceanBase Database compares the new locality with the original locality to determine whether to create, delete, or convert the replicas in the corresponding zones.

obclient> ALTER TENANT mysql_tenant set locality = "F@z1, F@z2, L@z3";

An OBServer stores only one replica of each partition.

One Paxos replica and multiple non-Paxos replicas of a partition can be distributed in each zone. You can specify non-Paxos replicas in the locality. Examples:

locality = "F{1}@z1, R{2}@z1": indicates that one full-featured replica and two read-only replicas are distributed in z1.

locality = "F{1}@z1, R{ALL_SERVER}@z1": indicates that one full-featured replica is distributed in z1 and read-only replicas (optional) are created on other servers in z1.

RootService creates, deletes, migrates, or converts replicas to make the distribution and types of replicas meet the specified locality.
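To illustrate how a new locality can be compared with the original one, the sketch below parses the locality strings shown in this section and reports which replicas would have to be removed or added per zone. It is an assumption-laden model: the grammar covers only the forms that appear in the examples here (such as F@z1, R{2}@z1, and R{ALL_SERVER}@z1), the function names are hypothetical, and it does not represent RootService's actual logic.

```python
import re

# One locality item: replica type, optional {count}, then @zone.
ITEM = re.compile(r"^([A-Za-z_]+)(?:\{(\w+)\})?@(\w+)$")


def parse_locality(locality):
    """Map (zone, replica_type) to a count, or to 'ALL_SERVER'."""
    result = {}
    for item in locality.split(","):
        match = ITEM.match(item.strip())
        if match is None:
            raise ValueError(f"unrecognized locality item: {item!r}")
        replica_type, count, zone = match.groups()
        if count is None:
            value = 1               # "F@z1" is treated as one replica
        elif count.isdigit():
            value = int(count)
        else:
            value = count           # for example "ALL_SERVER"
        result[(zone, replica_type)] = value
    return result


def diff_locality(old, new):
    """Return (removed, added) entries between two locality strings."""
    before, after = parse_locality(old), parse_locality(new)
    removed = {k: v for k, v in before.items() if after.get(k) != v}
    added = {k: v for k, v in after.items() if before.get(k) != v}
    return removed, added


removed, added = diff_locality("F@z1, F@z2, F@z3", "F@z1, F@z2, L@z3")
print("removed:", removed)
print("added:", added)
```

For the two localities used earlier in this section, only z3 differs: the full-featured replica is listed as removed and the log replica as added, which corresponds to a replica type conversion in that zone.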

Primary zone

You can set tenant-level configuration items to distribute the leaders of partitions under a tenant across specified zones. In this case, the zones in which the leaders are located are called the primary zone.

The primary zone is a collection of zones. Zones separated by semicolons (;) have different priorities. Zones separated by commas (,) have the same priority. RootService preferentially schedules leaders to zones with higher priorities based on the specified primary zone and distributes leaders on different servers in zones with the same priority. If no primary zone is specified, all zones under a tenant are considered to have the same priority. RootService distributes the leaders of partitions under the tenant on servers in all zones.

You can set tenant-level configuration items to specify or change the primary zone of a tenant.

Example:

Specify a primary zone when you create a tenant. Zone priorities: z1=z2>z3.

obclient> CREATE TENANT mysql_tenant RESOURCE_POOL_LIST = ('resource_pool_1'), primary_zone = "z1,z2;z3", locality = "F@z1, F@z2, F@z3" set ob_tcp_invited_nodes='%', ob_timestamp_service='GTS';

Change the primary zone of a tenant. Zone priorities: z1>z2>z3.

obclient> ALTER TENANT mysql_tenant set primary_zone = "z1;z2;z3";

Change the primary zone of a tenant. Zone priorities: z1=z2=z3.

obclient> ALTER TENANT mysql_tenant set primary_zone = RANDOM;
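The separator rules for the primary zone string can be sketched as a small parser: semicolons split priority levels and commas group zones of equal priority. The function names are hypothetical, and the RANDOM keyword is deliberately not handled here.

```python
def parse_primary_zone(primary_zone):
    """Split a primary zone string into priority levels, highest first."""
    levels = []
    for group in primary_zone.split(";"):
        zones = [zone.strip() for zone in group.split(",") if zone.strip()]
        if zones:
            levels.append(zones)
    return levels


def describe_priorities(primary_zone):
    """Render the levels in the z1=z2>z3 notation used in this section."""
    return ">".join("=".join(level) for level in parse_primary_zone(primary_zone))


print(parse_primary_zone("z1,z2;z3"))
print(describe_priorities("z1,z2;z3"))
print(describe_priorities("z1;z2;z3"))
```

The second and third calls print z1=z2>z3 and z1>z2>z3, matching the priorities stated for the corresponding statements.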

Note

The primary zone provides reference for selecting a leader. Whether a replica of a partition in the corresponding zone can become a leader also depends on the replica type and log synchronization progress.