Beyond Fine-tuning: Solving DABstep's Hard Mode with Versioned Assets

How OceanBase implemented a Train-Free GRPO mechanism to achieve SOTA on real-world financial reasoning

We are witnessing a paradox in the LLM landscape. While models are becoming reasoning giants, their deployment in high-stakes environments—financial analysis, risk control, and DevOps—often hits an invisible wall. The model seems to "understand" the prompt, yet the SQL it generates misses subtle business logic, the Python script it writes crashes on dirty data, and its definition of metrics drifts between conversations.

This reality was quantified in February 2025 by Adyen and Hugging Face with the release of Data Agent Benchmark for Multi-step Reasoning (DABstep). Derived from 450+ real-world financial tasks, it exposes the gap between academic benchmarks and production reality. The result? Even top-tier reasoning models like o3-mini and DeepSeek-R1 hovered around 13%-16% accuracy on the "Hard" subset.

However, on the DABstep Global Leaderboard, OceanBase’s DataPilot agent has secured the top spot, maintaining a significant lead over the runner-up for a month. The secret to our SOTA results wasn't a larger model or more GPU hours. It was a fundamental shift in engineering paradigm: moving from "Prompt Engineering" to "Asset Engineering."

This post details how we built a agent platform that actually resolves the agent gap in real-world financial reasoning.

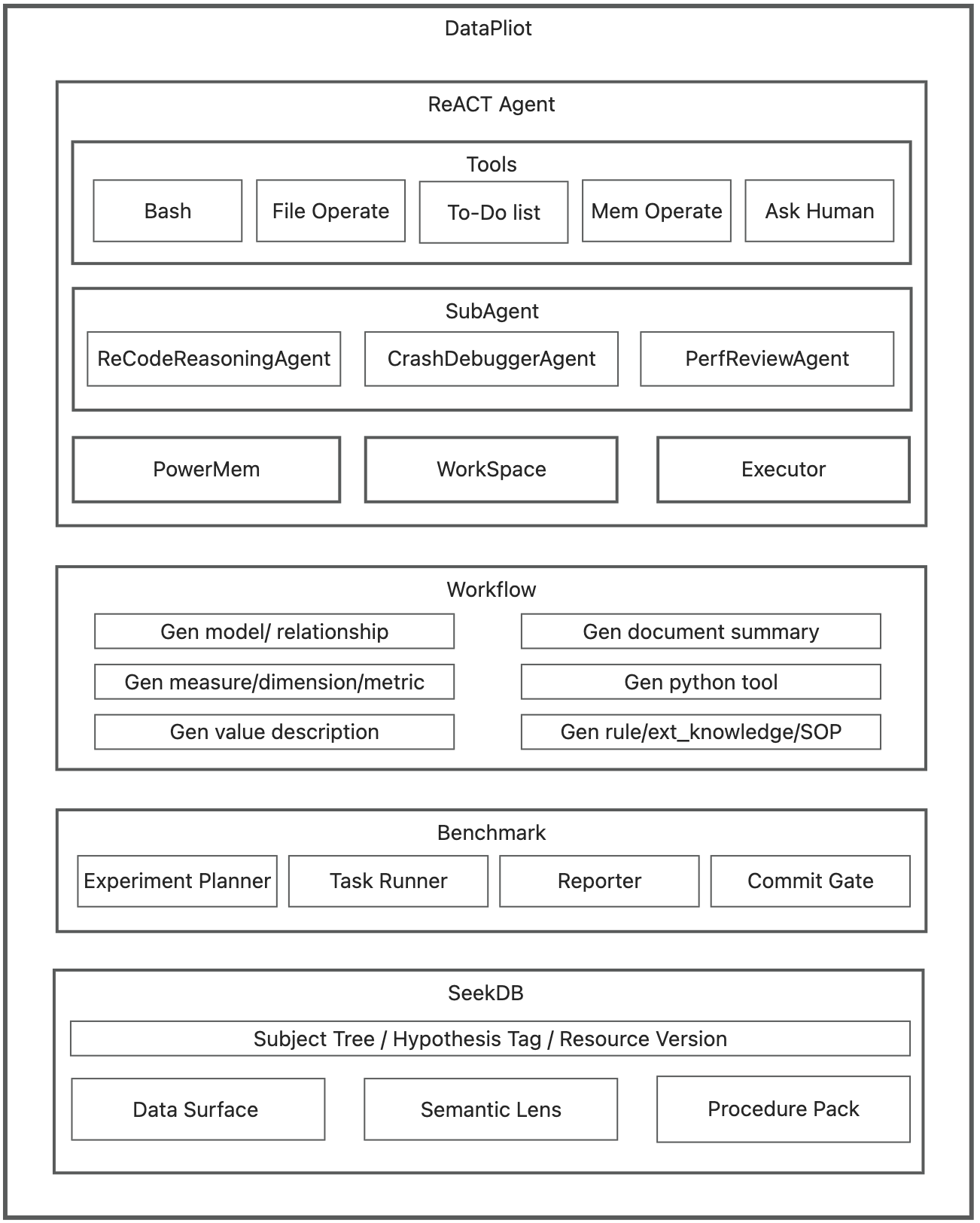

What is OceanBase DataPilot?

Before diving into the mechanics, it helps to understand the platform: DataPilot is OceanBase's intelligent NL-to-SQL and insight platform. It allows developers to build specific "analysis domains," automates the configuration of semantic layers, and provides natural language-driven analytics. DataPilot wraps these capabilities in granular permission controls and audit trails, moving beyond a simple chatbot to a governed data workspace.

The Challenge: Why "Hard Mode" is Just "Real Mode"

DABstep reveals three distinct constraints that break standard Text-to-SQL or RAG approaches in production:

- Ambiguity: The "Metric" Problem. A user asks for "domestic transactions." The database schema has

issuer_country,acquirer_country, andip_country. A standard model guesses based on probability. In finance, guessing is fatal. - Dirty Data: In the wild, an empty value isn't just

NULL. It’sNone,[],"", or"N/A". Generated Python scripts often lack the defensive programming to handle these, causingKeyErrororTypeErrorcrashes. - Performance Constraints: An

O(R * T)(R= number of rules, T= number transactions) nested loop might work on a CSV sample but times out on millions of transaction rows. - To solve this, we realized we couldn't rely on the model's intrinsic training. We needed a deterministic, external memory structure.

The Solution: From Ephemeral Context to Versioned Asset

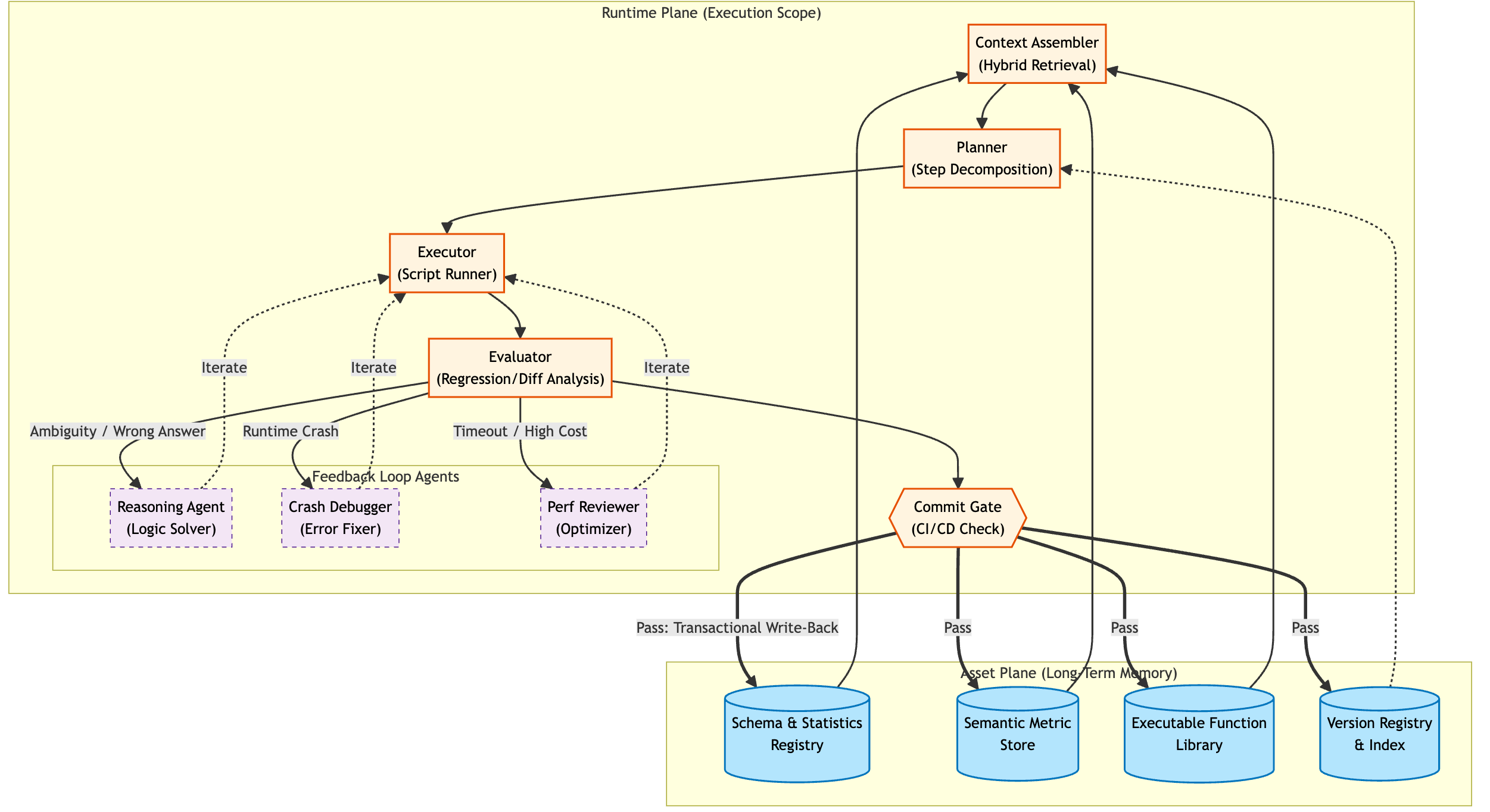

To bridge the gap between probabilistic models and deterministic business requirements, we architected DataPilot around a strict separation of concerns: the Asset Plane and the Runtime Plane.

- The Asset Plane (Long-Term Memory): Think of this as a curated Knowledge Base & Toolbox. It stores versioned Standard Operating Procedures (SOPs), validated code templates, and historical debugging logs. It is static, governed, and searchable.

- The Runtime Plane (Execution Scope): Think of this as a Just-in-Time (JIT) Assembly Factory. It does not store knowledge; instead, it dynamically selects the right tools from the Asset Plane based on the specific incoming task, assembles a temporary execution context, and runs the job.

Architecture Breakdown

This diagram maps DataPilot’s Asset Loop into four executable modules:

- Top Layer: Unified ReACT Agent

The single entry point for both Build and Runtime phases. It orchestrates tool execution and sandbox environments. When facing ambiguity, it triggers sub-agents to generate competing Hypothesis Implementations (e.g., multiple logic variants for a single metric).

- Middle Layer: Structured Workflow

The bridge between conversation and code. It structures loose outputs—conclusions, definitions, and SOPs—into formal resources. Crucially, it applies strict metadata to every asset:

- Subject Tree: For domain organization.

- Hypothesis Tag: To identify experimental branches.

- Resource Version: For Git-like traceability.

- Bottom Layer: OceanBase (Hybrid Storage)

The engine responsible for storage and retrieval. It supports dual-mode access: Precise Recall via tags (to fetch specific versions) and Semantic Assembly via vector search (to build open-ended context).

- Benchmark Loop: The "Branch-to-Trunk" Mechanism

This component treats Hypothesis Tags as A/B test groups. It executes batch evaluations across different logic branches, calculates a success score, and automatically promotes the winning context to the Trunk (Default Version), ensuring the system self-optimizes over time.

Instead of treating context as disposable text found via RAG, we manage it like versioned code.

The system operates as a continuous loop: Assets are retrieved to form a context, the agent executes the task, and the result is evaluated. Only successful patterns are written back to memory.

The 3-Layer Asset Structure

We structure knowledge into three distinct layers to handle specific types of failure modes:

Layer 1: Schema & Statistics Registry (The "Data Surface")

- Purpose: Grounding the model in physical reality to prevent hallucinations.

- Artifacts: Dictionaries, sparsity rates, value ranges, and anti-pattern warnings.

- Example: Explicitly registering a warning: "The field

Countryis misleading; it actually contains IP addresses, not ISO code"

Layer 2: Semantic Metric Store (The "Business Logic Layer")

- Purpose: Eliminating ambiguity via fixed definitions

- Artifacts: Metric calculation formulas, granularity constraints, and scope definitions.

- Example: Hard-coding the rule: "Domestic Transaction =

issuer_country==acquirer_country, ignoring IP."

Layer 3: Executable Function Library (The "Robustness Primitives")

- Purpose: Ensuring code execution stability.

- Artifacts: Verified code snippets, error-handling wrappers, and vectorized operations.

- Example: A standard library of functions that handle dirty data (e.g., safe_in), which the agent must import rather than rewrite.

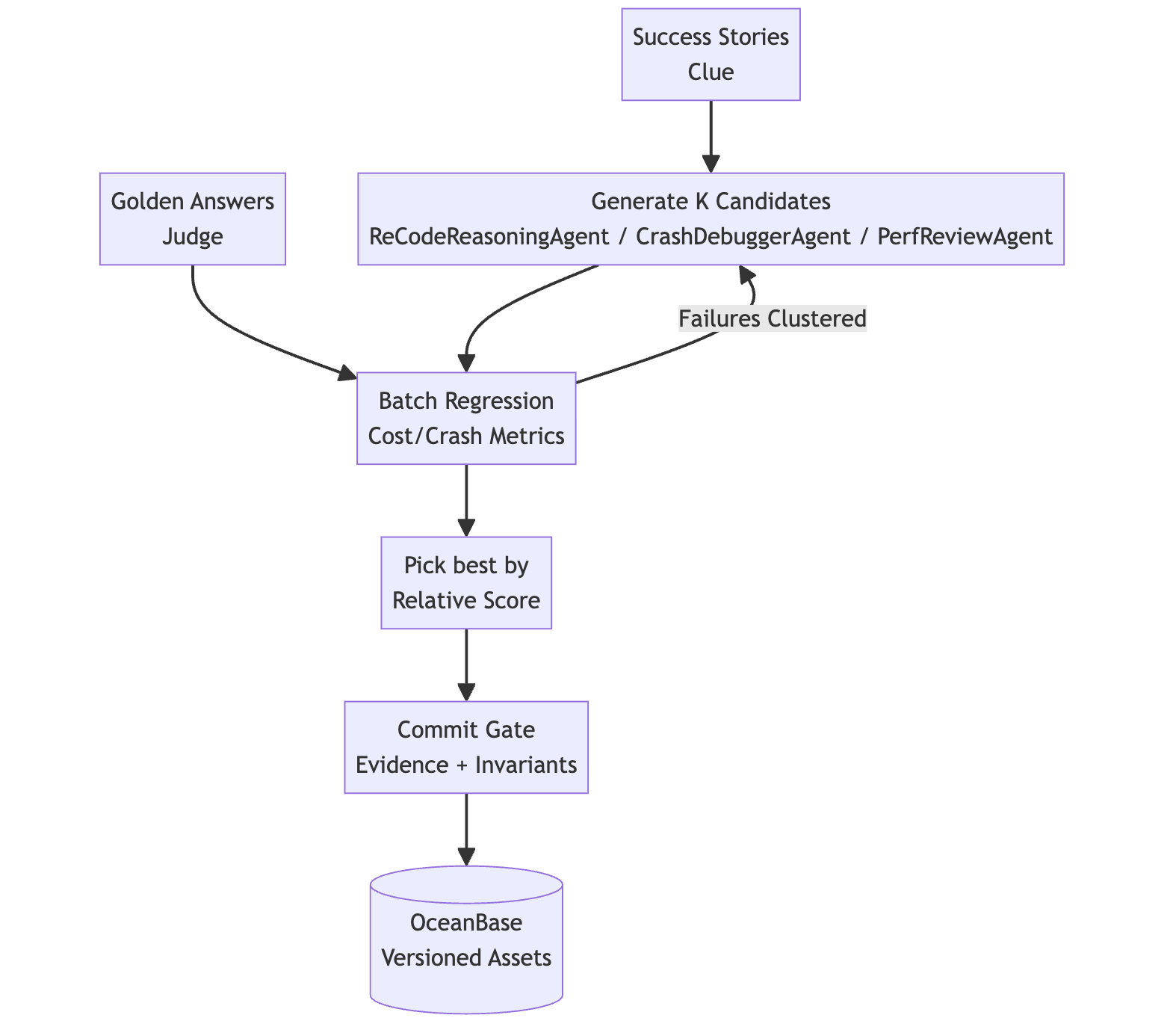

The Implementation: Train-Free Asset Iteration (GRPO for Context)

As the core of our engineering strategy, we adapted the Group Relative Policy Optimization (GRPO, normally used for model training)—and applied it to Context Engineering rather than model weights.

In standard GRPO, you generate a group of outputs, score them, and update the model's neural parameters. In our Train-Free approach, we update the Asset Plane. This allows the system to "learn" from its mistakes instantly without expensive re-training cycles.

The Feedback Loop: Branch, Bench, Promote

The iteration cycle follows a strict "Branch Generation — Tagged Evaluation — Trunk Promotion" pattern:

1. Branch Generation

When the ReACT Agent encounters ambiguity or missing logic, it explicitly forks the execution path. Sub-agents (like the ReCodeReasoningAgent) generate multiple candidate implementations. These variants are written to seekdb as structured assets, strictly versioned via Subject Tree and Hypothesis Tag metadata.

2. Tagged Control Evaluation

The Benchmark engine executes a controlled A/B test. It runs the identical regression suite against each Hypothesis Tag. By injecting specific tags during the retrieval phase, we isolate the variable: same task, same logic, different context. This produces an explainable, comparative score for every branch.

3. Trunk Promotion

Based on multi-objective metrics (Accuracy, Robustness, Cost), the system selects the optimal branch. The associated assets are promoted to the Default Trunk, making them the standard for future queries. Lower-scoring branches are archived—inactive but retained for traceability and rollback.

To prevent "brain damage" (regressions) in the long-term memory, the Commit Gate strictly enforces three invariants before any write to OceanBase.

- Correctness Invariant: The new asset must pass the regression suite (non-negotiable).

- Contract Invariant: Output formats (JSON structure, null semantics) must remain consistent.

- Cost Invariant: Memory usage and execution latency must stay within defined thresholds.

Three Evolutionary Loops: Solving the "Real-World" Triad

We apply this methodology to solve the three specific pain points identified in DABstep.

Loop 1: The Logic Loop (Solving Ambiguity)

- Problem: A table has issuer_country, acquirer_country, and ip_country. The user asks for "domestic transactions." The model guesses wrong 50% of the time.

- Fix: The system generates 3 hypothesis definitions (H1, H2, H3) using different field combinations. It runs them against the "Golden Answer."

- Asset: The winning definition (issuer == acquirer) is solidified into the Semantic Metric Store.

- Result: Future queries don't guess; they look up the definitive rule. Implicit "common sense" is turned into explicit configuration.

Loop 2: The Robustness Loop (Solving Crashes)

- Problem: Real-world data is dirty, which crashes scripts.

- Fix: The system collects crash logs, clusters them by error type, and generates a generic utility function to handle the edge case.

- Asset: A set of defensive primitives (e.g., a safe_in function that gracefully handles nulls and type mismatches) is added to the Executable Function Library.

- Result: Future scripts automatically import these robust tools instead of rewriting fragile logic, effectively preventing distinct queries from failing on the same data quality issues.

Loop 3: The Efficiency Loop (Solving Timeouts)

- Problem: An agent writes a nested loop to match rules against transactions, timing out on 1M rows.

- Fix: The Performance Reviewer Agent identifies the hotspot. It proposes a vectorized alternative (using Pandas

mergeor SQLJOIN). - Asset: The optimized code pattern is stored as a "High-Volume Variant" in the library.

- Result: When the Planner detects a large dataset, it automatically retrieves the high-performance variant, avoiding the performance trap.

These three loops reveal the essence of the DataPilot architecture: we stop the LLM from reasoning from scratch.

By solidifying "What data looks like" (Layer 1), "How business is defined" (Layer 2), and "How code is executed" (Layer 3) into assets, we ensure that every new query starts with a highly informed context.

The Implementation Pattern

Crucially, this system generalizes:

- A disambiguation pattern learned for "Domestic Transactions" is automatically reused for "Cross-border Transactions."

- A robustness primitive like

safe_inestablishes a pattern for handling other edge cases, such as division-by-zero or type casting errors. - An optimization strategy for nested loops becomes a standard architectural pattern for all future large-volume queries.

Ultimately, our top ranking on DABstep isn't because our model is smarter; it's because we built a mechanism to make the model smarter. Through three-layer asset management, we transform implicit reasoning into explicit rules. Through "Success Stories" and "Golden Answers", we persist ephemeral success into long-term memory. And through Hybrid Search and Transactional Write-back, we systematize fragmented knowledge. The result is a system that doesn't just answer a single question correctly—it learns how to solve a class of questions.

The Infrastructure: Why We Need "ACID for Agents"

Managing this architecture requires more than a simple Vector Database. A fragmented stack (MySQL for metadata + Pinecone for vectors + Redis for cache) introduces a fatal flaw for Agents: State Drift.

If you update a metric definition in the vector index but fail to update the corresponding SQL schema in the relational DB, the Agent hallucinates.

We utilized OceanBase because it offers a unified engine for Relational Data + Vector + Text, with the following benefits:

Unified Multi-Model Architecture

Startups often stitch together a "Franken-stack" (e.g., MySQL for metadata, Elasticsearch for docs, Milvus for vectors). This causes latency and consistency nightmares. OceanBase is a converged engine that handles relational tables, unstructured text, and vector embeddings in a single process. This ensures physical consistency across all data modalities without network overhead.

Native Hybrid Search via SQL

For an agent, context retrieval is never just vector similarity; it’s a complex composite query. OceanBase unifies various data types (relational, vector, text, JSON, and GIS) within a single engine and allows developers to combine vector search, full-text search and relational query in a single SQL statement. It eliminates complex application-level merge-sorting, achieving millisecond-level latency for context assembly.

Transactional "Memory" Commit (ACID)

Updating an Agent's long-term memory is a multi-step operation: updating the registry, storing the new code snippet, and logging the regression evidence. If these happen in separate systems, a partial failure leads to a "corrupted state" (e.g., the index points to code that doesn't exist). OceanBase enforces ACID transactions on knowledge updates. Either the entire knowledge package acts atomically, or it rolls back completely, ensuring the Agent's memory is always in a valid, traceable state.

Conclusion: The Shift to Asset Engineering

The DABstep results prove a critical lesson for the industry: in specialized, high-stakes domains, generalization comes from Architecture, not just Scaling.

OceanBase’s victory validates three specific technical decisions that transform the probabilistic nature of LLMs into a deterministic engineering workflow:

- Database over Files: Managing knowledge assets with ACID transactions and indices rather than loose file systems.

- Regression over Eval: Driving iteration through automated pass/fail tests rather than subjective human review.

- Hybrid Search over Vector-Only: Using a single SQL engine to deterministically assemble Facts (Relational), Rules (Full-text), and Experience (Vector) into a reproducible context.

We are currently integrating this entire "Agent Engineering" stack—including the 3-Layer Asset Manager, Hybrid Search Engine, and Automated Regression Runner—directly into the upcoming release of OceanBase DataPilot. Stay tuned.