From Complex to Simple: How We Built seekdb for the AI Era

Since writing the first line of code for OceanBase in 2010, we have been obsess ed with one ambitious goal: building the ultimate general-purpose relational database. Over 15 years, we added Paxos-based consistency, MySQL compatibility, HTAP capabilities, and massive distributed scalability. We built a system that is incredibly powerful, but admittedly, increasingly complex.

But in 2022, the world shifted.

The explosion of AI didn't just change user expectations; it changed how we build software. We realized that the AI era doesn't need another heavy, complex enterprise database. It needs agility. It needs flexibility.

So, we did something counter-intuitive: We started stripping the complexity away.

We went back to the drawing board to understand what an AI application actually needs from a database. Our answer is OceanBase seekdb (hereinafter referred to as seekdb).

What We Saw Developers Struggling With

As we watched the AI wave crash over the industry, we kept seeing the same problems in every architecture diagram and every post-mortem.

The Data Silo Explosion

It's not just rows and columns anymore—it's JSON, vectors, text, GIS, and more. Developers are forced to stitch together multiple specialized engines (one for metadata, one for docs, one for vectors) and hope they behave like a single system. It is fragile and complex.

The Freshness vs. Scale Trade-off

Most vector solutions force a choice: speed or consistency. You either get scale with "eventual consistency" (stale RAG results), or you get freshness but hit a low performance ceiling. OceanBase solved this for TP/AP workloads; we believed AI needed the same guarantee—vectors must be queryable the millisecond they are written, without sacrificing distributed scaling.

The "Glue Code" Tax

A typical AI stack is a Frankenstein monster: Query MySQL, query Elasticsearch, query Pinecone, then write 500 lines of Python to merge and re-rank the results. This is generic infrastructure logic masquerading as application code. It bloats your codebase and slows down development.

Operational Fatigue

MongoDB, Redis, MySQL, Vector DBs—each has its own API, connection pool, and failure modes. If building a simple AI app requires managing three distinct clusters and learning three different query languages, the infrastructure has failed the developer.

The Non-Negotiable Traits of a Modern AI Database

To solve this, we defined what the data infrastructure must look like for the AI era:

- Multi-Modal Native: Treats vectors, JSON, text, and scalars as a unified whole, not distinct silos.

- Uncompromising Performance: Delivers high ingestion throughput and low-latency inference with zero data lag.

- Intelligent Primitives: Moves "brain" functions (embedding, reranking, completion) inside the DB engine.

- Developer Simplicity: Developer-first, not infra-first. Lightweight, instant to start, and invisible to manage.

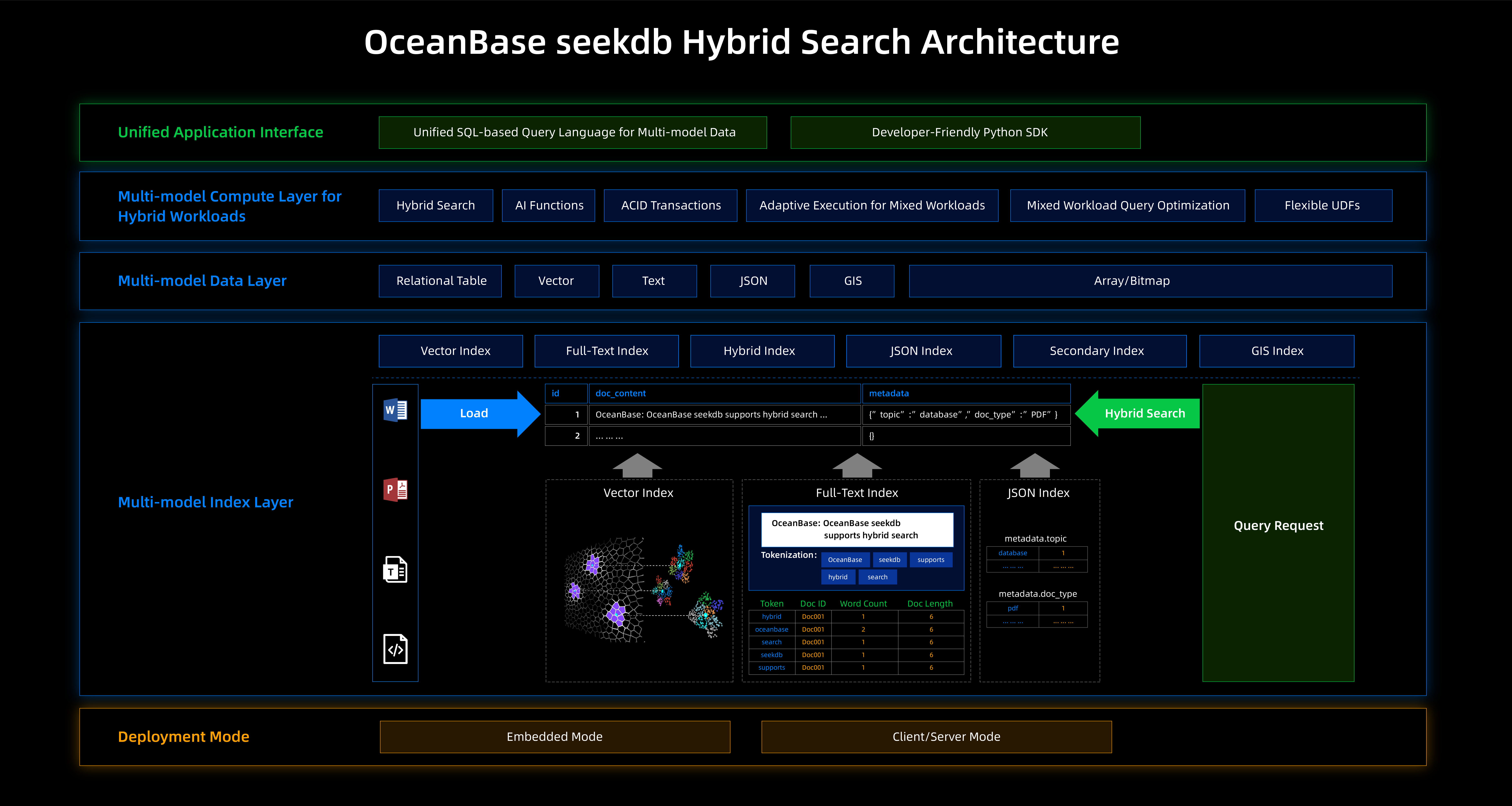

Meet OceanBase seekdb

seekdb is an AI-native hybrid search database open-sourced under Apache 2.0. It isn’t a patch on top of OceanBase; it’s a rethinking of the engine to solve the engineering challenges above.

Unified Multi-Modal Storage. No More Silos

seekdb supports scalar, vector, text, JSON, and GIS data in a single engine.

Crucially, all indexes exist in the same transaction domain. When you update a row, the scalar, vector, and full-text indexes update synchronously. There is no "inconsistency window" common in architectures that rely on CDC (Change Data Capture) to sync data between a SQL DB and a Vector DB.

True Hybrid Search. Single Query

For real applications, you almost never run pure vector search or pure keyword search. You do both, plus filters. seekdb supports doing all of this in one query pipeline:

- Scalar Filtering: Narrows the scope from millions to thousands.

- Coarse Sorting: Uses Vector + Full-text search (low resource cost).

- Reranking: Applies high-precision re-scoring.

You can tune the weights via the DBMS_HYBRID_SEARCH package. If you care more about exact keyword matches, boost the full-text weight. If you need semantic understanding, boost the vector weight.

AI Inside. Document-In, Data-Out

AI is built into the database, not bolted on from the outside.

Out of the box, seekdb embeds a small model for generating vector representations. For many use cases, you can completely ignore vector management—you work with text, and the system handles the embeddings. When you need higher quality, you can easily plug in external models (like OpenAI).

We currently expose four core AI functions directly in SQL:

- AI_EMBED: Turn text into vector embeddings.

- AI_COMPLETE: Text generation with templated prompts.

- AI_RERANK: Sort text results with a reranker model.

- AI_PROMPT: Assemble prompt templates and dynamic data into JSON.

Developer Simplicity: Light, Fast, and Flexible

We designed seekdb to fit into your workflow, not disrupt it. Whether you are hacking on a laptop or deploying to a cluster, the experience should feel frictionless.

Flexible Deployment

seekdb supports two primary modes so you can start small and scale without changing databases:

- Embedded Mode: A lightweight library that lives directly inside your application via pip install. Perfect for rapid prototyping, CI/CD pipelines, or edge devices.

- Server Mode: The recommended path for production. It remains incredibly efficient—we have cleared the VectorDBBench production-grade bar running on a minimal 1C2G spec.

Hassle-Free Schemaless API

We know you don't want to write complex DDL just to test an idea. That's why we expose a Schemaless API for rapid iteration.

You simply create a collection and upsert documents. We handle the rest—automatically creating the schema, generating embeddings, and building the vector and full-text indexes in the background.

import pyseekdb

client = pyseekdb.Client()

collection = client.get_or_create_collection("products")

# Auto-vectorization and storage

collection.upsert(

documents=[

"Laptop Pro with 16GB RAM, high-speed processor",

"Gaming Rig with RTX 4090 and 1TB SSD"

],

metadatas=[

{"category": "laptop", "price": 1200},

{"category": "desktop", "price": 2500}

],

ids=["1", "2"]

)

# Hybrid Search: Vector semantic + SQL filter + Keyword match

results = collection.query(

query_texts=["powerful computer for work"], # Vector search

where={"price": {"$lt": 2000}}, # Relational filter

where_document={"$contains": "RAM"}, # Full-text search

n_results=1

)

Want full control? You can always drop down to standard SQL to fine-tune schemas and indexing strategies whenever you need to.

SQL-Native, Battle-Tested Engine

seekdb inherits the core engine capabilities from OceanBase:

- Real-time writes and real-time reads.

- Strict ACID transactions.

- MySQL compatibility, so existing applications can migrate without rewriting everything.

Data Stack Comparison: Why seekdb?

We didn't build seekdb to win a feature checkbox war. We built it to eliminate the "Frankenstein" architectures that plague AI engineering teams.

Here is how the unified approach compares to the two most common patterns we see in production today.

| Feature | The "Classic" (SQL + ES/Solr) | The "Specialist" (SQL + Vector DB) | OceanBase seekdb (Unified AI-Native DB) |

| The Stack | Three Systems: Relational DB + Search Engine + Vector Store. | Two Systems: Relational DB + Dedicated Vector DB. | One System: All-in-one SQL Engine. |

| Data Consistency | Weak/Eventual. Relying on CDC pipelines introduces lag (ms to seconds). | Disconnected. No transactional guarantee between your business data and vectors. | Strict ACID. Scalars, vectors, and text indexes update atomically. Zero lag. |

| Search Capability | Siloed. Great full-text, but vector search is often an afterthought. | Lopsided. Great vector search, but full-text support is usually weak. | True Hybrid. Native Vector + Full-Text + Scalar filtering in one pipeline. |

| Dev Experience | Ops Heavy. 3x config, 3x monitoring. Debugging sync is a nightmare. | Glue Code Heavy. Complex app logic required to join and rank results. | App Focused. Logic is pushed down to the DB. You write SQL, not plumbing. |

Built for What You're Building Now

We aren't just building this in a vacuum. We are powering real scenarios:

- RAG & Knowledge Retrieval: Hybrid search is the backbone of modern knowledge bases. Our reference app, MineKB, delivers top-10 hybrid search results on 10,000 document chunks in just 15ms—80x faster than traditional stacks.

- Agentic Memory: Agents need persistent memory to remember user history across sessions. We built PowerMem on seekdb to solve this, hitting SOTA on the LOCOMO benchmark while slashing context token usage by 96%.

- AI Coding Assistants: Code search is effectively specialized RAG that requires parsing and indexing massive repositories rapidly. seekdb fits this pipeline naturally, and we are currently optimizing these high-complexity retrieval flows with major AI coding teams.

- MySQL AI Upgrades: For teams on MySQL, seekdb offers a smooth path forward without rewriting your app. You retain full SQL compatibility and transactional strength while unlocking native hybrid search and AI functions.

- Edge-to-Cloud Intelligence: Since seekdb runs as both an embedded library and a server, you can deploy the exact same code logic on edge devices and the cloud.

We Are Just Getting Started

seekdb is young. It’s not perfect, but it represents a new direction: a database that adapts to the developer, not the other way around. We have stripped away the complexity so you can focus on the intelligence of your application, not the plumbing of your data.

Our immediate roadmap focuses on:

- Lower Footprint: Optimizing to run the full VectorDBBench suite stably on a 1C1G instance.

- Mac Native: Ensuring a smooth, native experience for local development on Apple Silicon.

- Ecosystem: Deeper integration with LangChain, LlamaIndex, and JavaScript SDKs.

Try It Out

We need your feedback to make seekdb better. Break it, test it, and tell us what you need. Let’s build the data infrastructure for the AI era together.

- GitHub: github.com/oceanbase/seekdb

- Docs & Demos: www.oceanbase.ai/docs